Artificial intelligence (AI) is one of the upcoming facets of modern technology. From virtual assistants and chatbots to legal research and medical diagnosis, artificial intelligence (AI) is changing our communications, businesses, and way of life. Though there are benefits to these techniques, there are also drawbacks. One disquieting limitation of large language models (LLMs) and generative AI models is “AI hallucinations.”

An artificial intelligence hallucination is a situation where a model generates data that appears to be correct but is indeed incorrect, misleading, or useless. Due to this issue, AI systems can become less reliable and dependable and even hazardous in important domains such as banking, law, and medicine.

What are AI Hallucinations?

Artificial intelligence hallucinations are possible when an AI model creates content that seems coherent and well-structured but is actually factually in error, inconsistent, or entirely fictional. Fabricating historical events and data, skewing scientific facts, or making references are just a couple of the many ways in which these hallucinations could present.

In natural language processing (NLP), hallucinations are especially common in large language models such as GPT, BERT, and so on. For example, a language model can proudly cite a fictional research article or provide a totally fabricated name if asked for the name of book’s author. Despite the fact that these responses are appropriate to the context and grammatically correct, they could be entirely nonsensical.

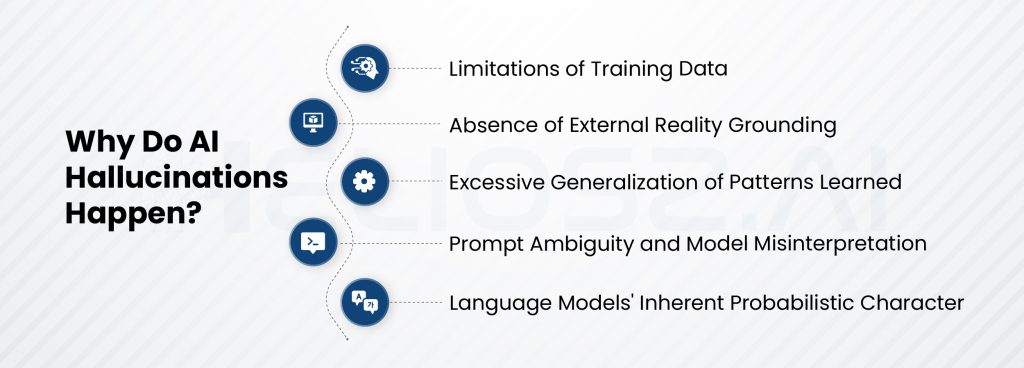

Why Do AI Hallucinations Happen?

a) Limitations of Training Data

The quality of the data upon which AI models are trained dictates their quality. If the training dataset is biased, incomplete, dated, or contains errors, then the model can take in and mimic those flaws. Because large language models train on large corpora scraped off the internet, they always take in both correct and incorrect content. Instead of “knowing” what is actually true when requested to generate a response, the model mimics patterns in its training.

b) Absence of External Reality Grounding

Unless specifically built to do so, the majority of language models are not automatically linked to external databases or real-time data. This discrepancy results in “ungrounded generation,” where the model synthesizes responses from training data instead of cross-referencing them with current or reliable sources. Hallucinations are more likely when there is no external grounding, particularly in dynamic domains like current affairs or quickly changing technologies.

c) Excessive Generalization of Patterns Learned

Patterns allow language models to generalize. A model may overgeneralize associations if it observes that particular phrases or structures frequently occur together. For instance, the model may produce references that look similar even if there isn’t a paper with that format if it has seen a lot of academic citations. These are referred to as “template-based hallucinations” and are particularly prevalent when creating academic or technical texts.

d) Prompt Ambiguity and Model Misinterpretation

The format in which a user asks a question has a strong effect on the output of the model. A poorly phrased or incomplete prompt will cause the model to misinterpret and hallucinate. Well-formatted prompts can also hallucinate if the model doesn’t have adequate context or belief in the subject. In essence, the model can “guess” the best answer from partial input, resulting in fabricated or hallucinated answers.

e) Language Models’ Inherent Probabilistic Character

Fundamentally, language models use learned probabilities to predict the subsequent word in sequence. Because of their probabilistic nature, they are sophisticated pattern matchers rather than deterministic truth engines. As a result, the model may “hallucinate” information to preserve fluency and coherence when confidence in the next likely token is low, particularly on specialized or unclear topics.

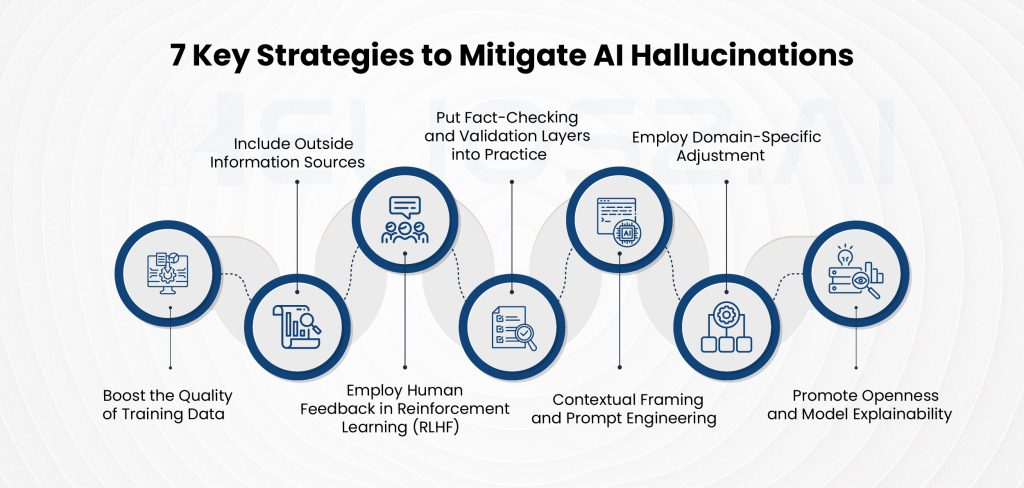

Strategies to Mitigate AI Hallucinations

Strategies to Mitigate AI Hallucinations

a) Boost the Quality of Training Data

The probability of hallucinations can be decreased by improving the training dataset’s quality, diversity, and accuracy. This entails eliminating false information, selecting datasets that are specific to a given domain, and preventing the use of unreliable sources twice. Reliable model outputs are greatly enhanced by clean, high-quality data.

b) Include Outside Information Sources

Linking language models to search engines, real-time databases, or APIs can assist in grounding their answers in current, accurate data. One such method is retrieval-augmented generation (RAG), in which models obtain pertinent information from reliable sources prior to producing text. The amount of ungrounded content is greatly decreased by this technique.

c) Employ Human Feedback in Reinforcement Learning (RLHF)

AI outputs are more in line with human values and factual accuracy when models are trained using reinforcement learning and human feedback. During training, human reviewers steer the model away from inaccurate or deceptive content, increasing the likelihood that the model will produce reliable information later on.

d) Put Fact-Checking and Validation Layers into Practice

Hallucinations can be prevented prior to content reaching the end user by implementing a post-generation fact-checking layer. In order to detect discrepancies or unverified claims, AI text can be run through verification algorithms or cross-checked against knowledge graphs. This is particularly useful in academic, business, and journalistic environments.

e) Contextual Framing and Prompt Engineering

A well-crafted prompt can eliminate uncertainty and direct the model toward more precise results. The rate of hallucinations can be decreased by giving the model clear instructions, enough context, and requests that it cite sources or validate assertions. An increasingly crucial ability for the safe and efficient deployment of LLMs is prompt engineering.

f) Employ Domain-Specific Adjustment

By constraining the scope of the model and enriching its knowledge in a specific domain, fine-tuning language models with domain-specific data—such as court documents, medical records, or scientific papers can reduce hallucinations. For industrial-strength AI applications where accuracy is paramount, this approach is highly desirable.

g) Promote Openness and Model Explainability

Creating models with explainable AI (XAI) capabilities can assist users in comprehending the process and motivation behind the creation of a specific output. Users can detect and fix hallucinated content more readily if they are able to follow the model’s logic or pinpoint the influences of its sources.

Conclusion

The growth of language models and generative AI is strongly hindered by AI hallucinations. These seemingly reliable but unfounded outcomes can mislead customers, harm companies, and influence decisions in various industries. We can reduce these issues by understanding the root causes, which can involve factors such as model conception and data constraints, and using careful risk-reduction practices.

We must make sure that AI-generated material is correct, understandable, and reliable as it more and more pervades our lives. To build a well-regulated AI environment that harvests the gain from smart systems without their periodic but damaging mistakes, developers, corporations, and buyers must work together.