Large Language Models (LLMs) like GPT-4, Claude, and Gemini have revolutionized artificial intelligence with their ability to understand and generate human-like text. But a crucial question remains: Can LLMs truly reason?

In this ultimate guide to reasoning in LLMs, we’ll explore what reasoning means in the context of language models, what are the types of reasoning, and the techniques that are pushing the boundaries of AI reasoning.

What is Reasoning in LLMs?

Reasoning refers to the process of drawing conclusions or making decisions based on evidence or logic. In humans, it’s a core cognitive function. In LLMs, reasoning is a simulation of this ability, they infer patterns, make predictions, and perform complex logical tasks using massive pre-trained neural networks.

While LLMs do not “reason” like humans with mental models and intentions, they often mimic reasoning patterns due to their training on vast corpora of logical, structured, and problem-solving texts.

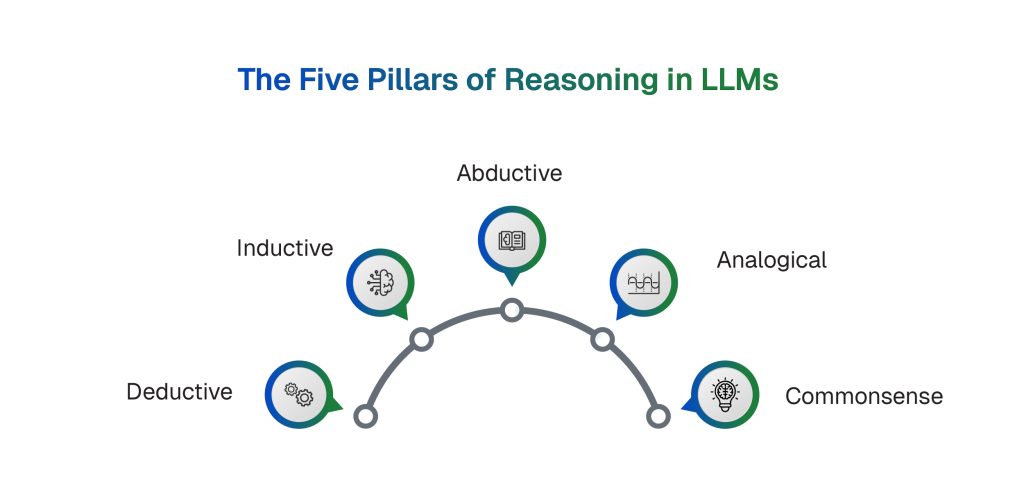

Types of Reasoning in LLMs

Large Language Models (LLMs) like GPT-4 and Claude exhibit impressive capabilities when it comes to simulating human-like reasoning. While they don’t truly “think” in the way humans do, LLMs can replicate many types of reasoning patterns through their training on vast textual data. Below are the major types of reasoning observed in LLMs, each playing a crucial role in different AI tasks.

Deductive Reasoning

Deductive reasoning involves deriving specific conclusions from general rules or premises. It is the most logical and structured form of reasoning, often used in mathematics, philosophy, and programming. For example, if an LLM is given the premises “All birds have feathers” and “A penguin is a bird,” it can deduce that “A penguin has feathers.” In this context, LLMs perform deductive reasoning by identifying logical implications embedded in the language. This type of reasoning is particularly useful in rule-based decision-making, coding tasks, and legal document analysis, where precision and consistency are essential.

Inductive Reasoning

Inductive reasoning refers to making generalized conclusions based on specific examples or patterns. It’s the reasoning behind forming hypotheses and making predictions. For instance, if an LLM is told that “John went running every morning last week,” it might conclude that “John will probably go running tomorrow.” Unlike deduction, which guarantees certainty if the premises are true, induction only offers probabilistic conclusions. Since LLMs are inherently statistical models, they are especially well-suited for inductive reasoning, which aligns with their pattern-recognition nature. This makes them excellent at tasks like trend forecasting, summarization, and content generation.

Abductive Reasoning

Abductive reasoning involves inferring the most likely explanation from incomplete information. It’s the type of reasoning people use when forming a hypothesis based on observations. For example, if an LLM sees “The streets are wet,” it might conclude that “It probably rained.” This reasoning is neither deductively valid nor inductively guaranteed, but it’s useful for forming plausible hypotheses. LLMs often use abductive reasoning in creative writing, question answering, and even in diagnostic tools, such as suggesting causes for technical errors or health symptoms based on context clues. It adds a layer of inference that allows the model to “guess” explanations when data is sparse.

Analogical Reasoning

Analogical reasoning allows LLMs to draw comparisons between similar concepts or situations to solve new problems. This involves identifying structural similarities rather than surface-level traits. For instance, if a model understands that “A battery is to a flashlight as gasoline is to a car,” it can reason that both pairs represent a power source to a device. Analogical reasoning is common in human learning and is a powerful cognitive shortcut. LLMs mimic this by finding linguistic parallels in training data, making it useful in educational tools, metaphoric writing, and abstract problem-solving.

Commonsense Reasoning

Commonsense reasoning involves making everyday assumptions about how the world works — knowledge that humans take for granted. For example, knowing that “If you drop a glass, it might break” or “People usually sleep at night.” LLMs simulate commonsense reasoning by learning from vast corpora that embed cultural, physical, and social norms. This type of reasoning is critical for maintaining coherence in conversations, understanding implicit context, and answering everyday questions. While LLMs have improved in this area with datasets like ATOMIC and SocialIQA, true commonsense reasoning remains a challenging frontier due to its nuanced, unstated nature.

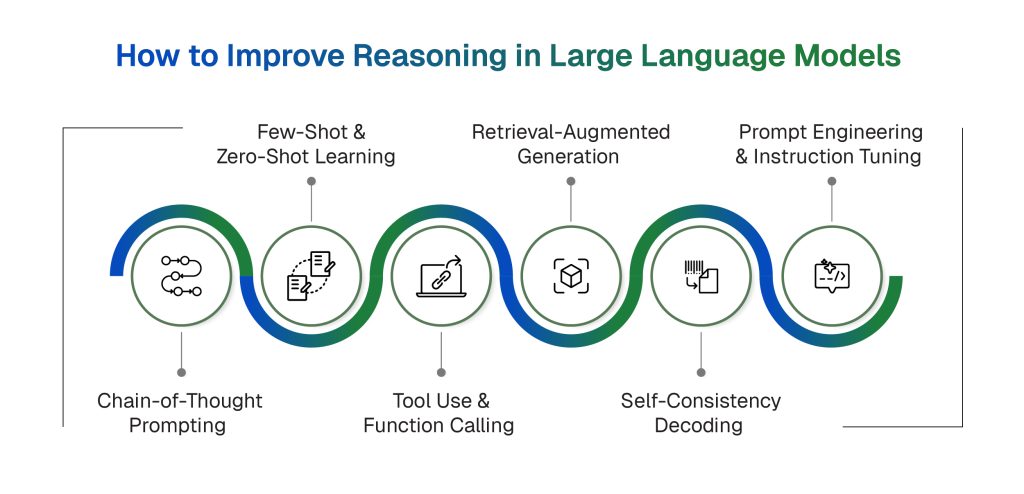

Techniques to Enhance LLM Reasoning

While Large Language Models (LLMs) demonstrate impressive reasoning abilities, their effectiveness heavily depends on how they’re prompted and integrated into applications. Researchers and developers have discovered several techniques that significantly enhance the reasoning capabilities of LLMs, allowing them to tackle more complex, nuanced, and accurate tasks. Below are the key techniques used to boost LLM reasoning performance.

Chain-of-Thought Prompting

Chain-of-thought prompting is a method that encourages the model to reason step-by-step before arriving at a final answer. Instead of jumping straight to a conclusion, the LLM is guided to generate intermediate reasoning steps that lead logically to the outcome. For example, in solving a math word problem, prompting the model with “Let’s think step by step” can lead it to break down the problem, compute intermediate values, and then provide the correct result. This technique improves performance especially on tasks requiring logical deduction, arithmetic, or multi-step reasoning, and has been shown to outperform direct answer prompting in several benchmarks.

Few-Shot and Zero-Shot Learning

Few-shot learning involves providing the model with a few examples of the task within the prompt. These examples act as a template that the model can follow to generate correct answers. In contrast, zero-shot learning relies on carefully crafted instructions without any examples. Both methods leverage the LLM’s pretraining on massive text corpora, allowing it to generalize to new tasks without explicit fine-tuning. Few-shot learning is especially effective for tasks that benefit from context, like translation, text classification, or analogy solving, while zero-shot learning is useful when examples are unavailable or the prompt must remain concise.

Tool Use and Function Calling

Tool use is an emerging frontier in enhancing LLM reasoning by allowing models to call external functions, APIs, or tools such as calculators, databases, or web search engines. This approach extends the model’s capabilities beyond its training data and token limit. For example, when an LLM encounters a complex math expression or a live data query, it can invoke a function to compute the answer or fetch real-time information. This is the foundation of agent-based systems and platforms like OpenAI’s function calling or LangChain agents, where reasoning is distributed between the LLM and external utilities, significantly improving accuracy and reliability.

Memory and Retrieval-Augmented Generation (RAG)

Retrieval-Augmented Generation (RAG) enhances reasoning by giving the LLM access to external knowledge bases or document stores at inference time. Instead of relying solely on internal memory (which may be outdated or limited), the model retrieves relevant information using a search component and then generates an answer based on that context. This is particularly powerful for knowledge-intensive tasks like question answering, report generation, or customer support. It also supports dynamic reasoning, where the model can “look up” facts or case-specific knowledge on demand, making responses more accurate and context-aware.

Self-Consistency Decoding

Self-consistency decoding is a decoding strategy that involves sampling multiple reasoning paths (especially in chain-of-thought settings) and selecting the most consistent final answer. Instead of taking the first answer generated, this method evaluates several outputs and chooses the one that appears most frequently or logically robust. This technique is particularly effective in math and logic-heavy tasks, reducing variability and hallucinations. By leveraging the statistical nature of LLMs, self-consistency enhances reasoning reliability without changing the underlying model.

Prompt Engineering and Instruction Tuning

Prompt engineering refers to the art of crafting instructions that effectively guide the LLM to reason accurately. Subtle changes in phrasing, formatting, or structure can have a significant impact on model output. On a broader scale, instruction tuning is a fine-tuning process where the model is trained on a curated dataset of task instructions and high-quality completions. Both methods help align LLM outputs with user intent, improving interpretability and consistency. These techniques are crucial for making models more controllable and reliable in reasoning-intensive use cases.

Heliosz: Next-Gen Agentic and Generative AI Solutions for Enterprises

Heliosz delivers next-generation agentic and generative AI solutions tailored for enterprise needs. Our systems are built to reason, adapt, and take action, enabling businesses to achieve greater efficiency, smarter decision-making, and sustainable growth. From enhancing customer interactions to streamlining operations and driving innovation, Heliosz provides AI solutions that create measurable impact at scale.

Final Thoughts

Reasoning in LLMs is not magic — it’s the outcome of powerful pattern recognition, guided prompting, and vast data exposure. As techniques like chain-of-thought prompting, tool integration, and retrieval augmentation mature, LLMs are getting closer to general-purpose reasoning agents.

Understanding how reasoning works in LLMs is essential for developers, researchers, and businesses leveraging AI in mission-critical scenarios.

If reasoning in LLMs feels overly technical or complex, Heliosz brings together a team of experts who design and implement GenAI and agentic AI solutions tailored to your business operations.

Whether you’re reimagining customer experiences, optimizing operations, or creating new revenue streams, Heliosz is your partner in building AI that truly transforms the enterprise.

Let’s start the successful AI journey together!